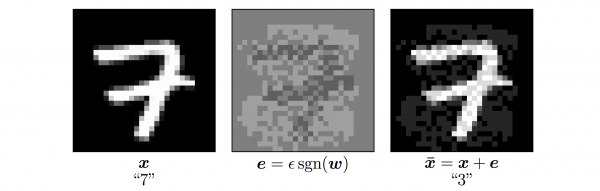

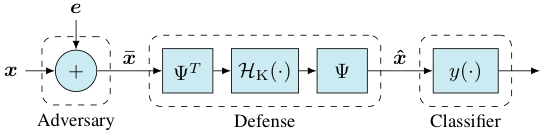

- Deep neural networks are vulnerable to small adversarial perturbations in data. We analyze defenses that exploit sparsity in natural data.

- We show that sparsity-based defenses are provably effective for linear classifiers, and then extend our approach to deep networks.
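The summary does not say which sparsifying basis or projection the defense uses. Below is a minimal sketch that assumes an orthonormal Haar wavelet basis and a top-K projection front end; both are assumptions, and all helper names (`haar_forward`, `sparsify`, `linf_attack`, and so on) are hypothetical. It illustrates the linear-classifier setting. For a score w·x, the worst-case ℓ∞ perturbation of size ε is e = ε·sign(w), which shifts the score by ε‖w‖₁. Projecting the input onto its K largest basis coefficients before classifying discards any perturbation energy that falls outside the retained coefficients.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_forward(x):
    """Orthonormal multi-level Haar transform (assumed basis); len(x) must be a power of 2.

    Returns coefficients ordered coarse to fine: [approx, coarsest detail, ..., finest details].
    """
    approx = list(x)
    details = []
    while len(approx) > 1:
        pairs = range(0, len(approx), 2)
        level_detail = [(approx[i] - approx[i + 1]) / SQRT2 for i in pairs]
        approx = [(approx[i] + approx[i + 1]) / SQRT2 for i in pairs]
        details = level_detail + details  # coarser levels go in front
    return approx + details


def haar_inverse(coeffs):
    """Invert haar_forward, rebuilding one level at a time from coarse to fine."""
    approx = [coeffs[0]]
    pos = 1
    while pos < len(coeffs):
        detail = coeffs[pos:pos + len(approx)]
        nxt = []
        for a, d in zip(approx, detail):
            nxt.extend([(a + d) / SQRT2, (a - d) / SQRT2])
        pos += len(approx)
        approx = nxt
    return approx


def keep_top_k(coeffs, k):
    """Zero all but the k largest-magnitude coefficients."""
    keep = sorted(range(len(coeffs)), key=lambda i: abs(coeffs[i]), reverse=True)[:k]
    out = [0.0] * len(coeffs)
    for i in keep:
        out[i] = coeffs[i]
    return out


def sparsify(x, k):
    """Hypothetical sparsifying front end: project x onto its top-k Haar coefficients."""
    return haar_inverse(keep_top_k(haar_forward(x), k))


def linear_score(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def linf_attack(w, eps):
    """Worst-case l_inf perturbation for a linear score: e = eps * sign(w)."""
    return [eps * ((wi > 0) - (wi < 0)) for wi in w]


if __name__ == "__main__":
    x = [1.0, 1.0, 1.0, 1.0, 3.0, 3.0, 3.0, 3.0]      # exactly 2-sparse in the Haar basis
    w = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0, 1.0, -1.0]  # hypothetical classifier weights
    e = linf_attack(w, eps=0.1)
    x_adv = [xi + ei for xi, ei in zip(x, e)]
    undefended = linear_score(w, x_adv) - linear_score(w, x)
    defended = linear_score(w, sparsify(x_adv, 2)) - linear_score(w, sparsify(x, 2))
    print(f"score shift without defense: {undefended:.3f}")
    print(f"score shift with top-2 front end: {defended:.3f}")
```

In this toy example the signal is exactly 2-sparse and the attack lands entirely in the finest-scale Haar coefficients, so the top-2 front end cancels its effect on the score (a shift of about 0 versus 0.8 without the defense). An attacker who knows the front end can instead target the retained coefficients, so in general the reduction is only partial. The sketch illustrates the mechanism and does not reproduce the authors' provable guarantees.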

Students

Soorya Gopalakrishnan, Zhinus Marzi

Faculty

Upamanyu Madhow, Ramtin Pedarsani