Deep Illusion Adversarial ML Library

Deep Illusion is a toolbox for adversarial attacks in machine learning. The current version is only implemented for Pytorch models. DeepIllusion is a growing and developing python module which aims to help the adversarial machine learning community to accelerate their research. The module currently includes a complete implementation of well-known attacks (PGD, FGSM, R-FGSM, CW, BIM, etc..). All attacks have an apex(amp) version which you can run your attacks fast and accurately. We strongly recommend that amp versions should only be used for adversarial training since it may have gradient masking issues after the neural net gets confident about its decisions. All attack methods have an option (Verbose: False) to check if gradient masking is happening.

All attack codes are written in functional programming style, therefore, users can easily call the method function and feed the input data and model to get perturbations. All codes are documented and contain the example used in their description. Users can easily access the documentation by typing "??" at the and of the method, they want to use in Ipython (E.g FGSM?? or PGD??). Output perturbations are already clipped for each image to prevent illegal pixel values. We are open to contributors to expand the attack methods arsenal.

We also include the most effective current approach to defend DNNs against adversarial perturbations which is training the network using adversarially perturbed examples. Adversarial training and testing methods are included in the torch defenses submodule.

The current version is tested with different defense methods and the standard models for verification and we observed the reported accuracies.

Maintainers: Metehan Cekic, Can Bakiskan, Soorya Gopal, Ahmet Dundar Sezer

Source code: https://github.com/metehancekic/deep-illusion

A Neuro-Inspired Autoencoding Defense Against Adversarial Perturbations

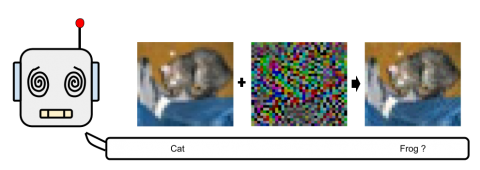

In this work we explore a neuro-inspired way to mitigate adversarial attacks on machine learning models. Specifically, we use sparse and overcomplete representations, conjectured to be characteristic of the visual system, lateral inhibition, synaptic noise and drastic nonlinearity, and show that an initial stage which uses these principles provides robustness against adversarial attacks comparable to adversarial training.

Source code: https://github.com/canbakiskan/neuro-inspired-defense

Related publications:

Polarizing Front Ends for Robust CNNs

Source code: https://github.com/canbakiskan/polarizing-frontend

Related publications: