Adversarial Machine Learning

Deep Illusion Adversarial ML Library

Source code: https://github.com/metehancekic/deep-illusion

Deep Illusion is a toolbox for adversarial attacks in machine learning. The current version is implemented only for PyTorch models. Deep Illusion is a growing Python module that aims to help the adversarial machine learning community accelerate its research. The module currently includes complete implementations of well-known attacks (PGD, FGSM, R-FGSM, CW, BIM, etc.). Every attack has an apex (amp) version with which you can run attacks quickly and accurately. We strongly recommend using the amp versions only for adversarial training, since they may suffer from gradient masking once the neural network becomes confident in its decisions. All attack methods have an option (Verbose: False) to check whether gradient masking is happening.

All attacks are written in a functional programming style, so users can simply call the attack function with the input data and the model to get perturbations. All code is documented, and each description contains a usage example. In IPython, users can access the documentation by typing "??" at the end of the method they want to use (e.g., FGSM?? or PGD??). Output perturbations are already clipped for each image to prevent illegal pixel values. We welcome contributors who want to expand the arsenal of attack methods.
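To make the calling pattern concrete, here is a minimal sketch of a functional-style FGSM step with per-image clipping. It is not Deep Illusion's API: the library targets PyTorch, whereas this sketch uses NumPy and a hypothetical logistic-regression model (`w`, `loss_grad`) with an analytic gradient. The names `fgsm`, `eps`, and `data_range` are assumptions chosen for illustration.

```python
import numpy as np


def fgsm(x, y, grad_fn, eps=8 / 255, data_range=(0.0, 1.0)):
    """Return an FGSM perturbation, clipped so that x + delta stays in data_range.

    grad_fn(x, y) must return the gradient of the loss with respect to x.
    (Hypothetical signature, not the library's.)
    """
    lo, hi = data_range
    delta = eps * np.sign(grad_fn(x, y))
    # Clip the perturbed input, then express the result as a perturbation.
    return np.clip(x + delta, lo, hi) - x


# Hypothetical stand-in model: logistic regression with fixed random weights.
rng = np.random.default_rng(0)
w = rng.normal(size=16)


def loss_grad(x, y):
    """Gradient of binary cross-entropy w.r.t. the input, for labels y in {0, 1}."""
    p = 1.0 / (1.0 + np.exp(-(x @ w)))  # predicted probability per sample
    return (p - y)[:, None] * w         # shape: (batch, features)


x = rng.uniform(0.0, 1.0, size=(4, 16))  # batch of 4 flattened "images" in [0, 1]
y = np.array([0, 1, 1, 0])
delta = fgsm(x, y, loss_grad)
x_adv = x + delta
```

The function takes the data and a gradient oracle and returns only the perturbation, so the caller decides how to use it (evaluation, adversarial training, and so on).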

We also include the most effective current approach for defending DNNs against adversarial perturbations: training the network on adversarially perturbed examples. Adversarial training and testing methods are included in the torch defenses submodule.

The current version has been tested for verification with different defense methods and standard models, and the accuracies we observed match the reported ones.

Maintainers: Metehan Cekic, Can Bakiskan, Soorya Gopal, Ahmet Dundar Sezer

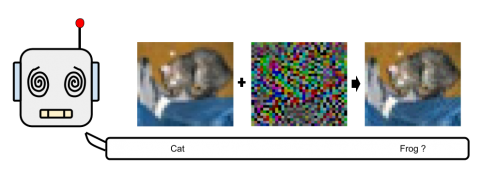

A Neuro-Inspired Autoencoding Defense Against Adversarial Perturbations

Source code: https://github.com/canbakiskan/neuro-inspired-defense

Related publications:

Polarizing Front Ends for Robust CNNs

Source code: https://github.com/canbakiskan/polarizing-frontend

Related publications:

Wireless Fingerprinting

Robust Wireless Fingerprinting: Generalizing Across Space and Time

- Our goal is to learn RF signatures that can distinguish between devices sending exactly the same message. This is possible due to subtle hardware imperfections (labeled "nonlinearities" in the figure below) unique to each device.

- Since the information in RF data resides in complex baseband, we employ CNNs with complex-valued weights to learn these signatures. This technique does not use signal domain knowledge and can be used for any wireless protocol. We demonstrate its effectiveness for two protocols: WiFi and ADS-B.

- We show that this approach is vulnerable to spoofing when using the entire packet: the CNN focuses on fields containing ID information (e.g., the MAC ID in WiFi), which can be easily spoofed. When using the preamble alone, reasonably high accuracies are obtained, and performance is significantly enhanced by noise augmentation.

- We also study robustness to confounding factors in data collected over multiple days and locations, such as the carrier frequency offset (CFO), which drifts over time, and the wireless channel, which depends on the propagation environment. We show that carefully designed data augmentation is critical for learning robust wireless signatures.
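The complex-valued weights mentioned above can be illustrated with a small sketch. It assumes the common construction of a complex convolution from four real convolutions; the function name `complex_conv1d` is hypothetical, and the layers in the repository may be implemented differently.

```python
import numpy as np


def complex_conv1d(x, w):
    """Valid-mode 1-D complex convolution built from four real convolutions.

    Uses (xr + j*xi) * (wr + j*wi) = (xr*wr - xi*wi) + j*(xr*wi + xi*wr),
    where * denotes convolution.
    """
    xr, xi = x.real, x.imag
    wr, wi = w.real, w.imag
    real = np.convolve(xr, wr, mode="valid") - np.convolve(xi, wi, mode="valid")
    imag = np.convolve(xr, wi, mode="valid") + np.convolve(xi, wr, mode="valid")
    return real + 1j * imag


rng = np.random.default_rng(0)
# Placeholder complex baseband samples and a placeholder complex filter.
signal = rng.normal(size=32) + 1j * rng.normal(size=32)
kernel = rng.normal(size=5) + 1j * rng.normal(size=5)
features = complex_conv1d(signal, kernel)
```

Splitting the operation this way lets real-valued deep learning frameworks carry the real and imaginary parts as separate channels.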

This repository contains scripts to simulate the effect of channel and CFO variations on wireless fingerprinting using complex-valued CNNs. It also includes a simulation-based dataset built from models of some typical nonlinearities, along with augmentation techniques, estimation techniques, and combinations of the two. The repository consists of four folders (cxnn, preproc, tests, and data) and a number of scripts in the main folder.
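As a rough illustration of this kind of simulation, the sketch below applies a random CFO and a random multipath channel to a complex baseband signal. The function names, the 20 MHz sample rate, the CFO range, and the tap model are all assumptions made for this example, not the repository's actual scripts or settings.

```python
import numpy as np


def apply_cfo(x, cfo_hz, fs_hz):
    """Rotate the signal by a carrier frequency offset of cfo_hz."""
    n = np.arange(x.shape[-1])
    return x * np.exp(2j * np.pi * cfo_hz * n / fs_hz)


def apply_channel(x, taps):
    """Pass x through a multipath channel (FIR filter), keeping the length."""
    return np.convolve(x, taps)[: x.shape[-1]]


def random_channel(rng, n_taps=3, decay=1.0):
    """Draw unit-energy complex Gaussian taps with exponentially decaying power."""
    power = np.exp(-decay * np.arange(n_taps))
    taps = np.sqrt(power / 2) * (rng.normal(size=n_taps) + 1j * rng.normal(size=n_taps))
    return taps / np.linalg.norm(taps)


def augment(x, rng, fs_hz=20e6, max_cfo_hz=20e3):
    """Apply one random channel and one random CFO (illustrative values)."""
    cfo_hz = rng.uniform(-max_cfo_hz, max_cfo_hz)
    return apply_cfo(apply_channel(x, random_channel(rng)), cfo_hz, fs_hz)


rng = np.random.default_rng(0)
# Placeholder for a received preamble in complex baseband.
preamble = rng.normal(size=64) + 1j * rng.normal(size=64)
augmented = augment(preamble, rng)
```

Drawing a fresh channel and CFO for each training example exposes the network to many propagation conditions, so it has less incentive to latch onto either as a device signature.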

Source code: https://github.com/metehancekic/wireless-fingerprinting

Simulated Dataset

We have created a simulation-based WiFi dataset based on models of some typical nonlinearities. We implement two kinds of circuit-level impairments: I/Q imbalance and power amplifier nonlinearity. The training set consists of 200 signals per device for 19 devices (classes). The validation and test sets each contain 100 signals per device. Overall, the dataset contains 3800 signals for training, 1900 for validation, and 1900 for testing. The dataset can be downloaded as an npz file from this Box link and must be copied into the data subdirectory.
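For intuition, the two impairment types can be sketched with simple textbook-style models. The specific models and parameter values below (a gain/phase I/Q imbalance and a memoryless third-order polynomial amplifier) are assumptions for illustration; the models used to generate the actual dataset may differ. The split sizes at the end restate the counts given above.

```python
import numpy as np


def iq_imbalance(x, gain_db, phase_deg):
    """Apply gain and phase mismatch to the Q branch (assumed model)."""
    g = 10 ** (gain_db / 20)
    phi = np.deg2rad(phase_deg)
    i, q = x.real, x.imag
    return i + 1j * g * (q * np.cos(phi) + i * np.sin(phi))


def pa_nonlinearity(x, a3):
    """Memoryless third-order polynomial power amplifier (assumed model)."""
    return x + a3 * x * np.abs(x) ** 2


# Split sizes as stated in the text.
N_DEVICES = 19
SIGNALS_PER_DEVICE = {"train": 200, "val": 100, "test": 100}
totals = {split: n * N_DEVICES for split, n in SIGNALS_PER_DEVICE.items()}

rng = np.random.default_rng(0)
# Hypothetical per-device impairment parameters; each device gets its own draw.
devices = [
    {
        "gain_db": rng.uniform(-1.0, 1.0),
        "phase_deg": rng.uniform(-5.0, 5.0),
        "a3": rng.uniform(-0.1, 0.0) + 1j * rng.uniform(-0.05, 0.05),
    }
    for _ in range(N_DEVICES)
]

# Placeholder transmit waveform: every device sends exactly the same samples.
clean = np.exp(2j * np.pi * 0.05 * np.arange(128))
received = [
    pa_nonlinearity(iq_imbalance(clean, d["gain_db"], d["phase_deg"]), d["a3"])
    for d in devices
]
```

Because every simulated device sends the same waveform, the only differences between the outputs come from the per-device impairment parameters, which is the signature a classifier has to learn.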

Related publications: