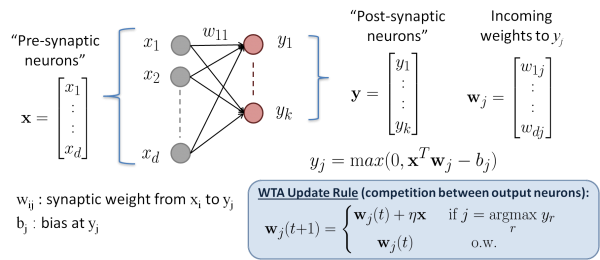

Hebbian learning, based on the simple “fire together, wire together” model, is ubiquitous in neuroscience as the fundamental principle for learning in the brain. So far, however, it has found limited applicability in machine learning as an algorithm for training neural networks. Unsupervised and semi-supervised learning are becoming increasingly relevant because large amounts of unlabelled data are now easy to obtain. We believe Hebbian learning can play a crucial role in the development of this field, as it offers a simple, intuitive and neuro-plausible approach to unsupervised learning. In this work we explore how to adapt Hebbian learning for training deep neural networks. In particular, we develop algorithms around the core idea of competitive Hebbian learning while enforcing that the neural codes display the vital properties of sparsity, decorrelation and distributedness.

As an example, we demonstrate the efficiency of our algorithms by training a deep convolutional network for image recognition. The network is trained in a bottom-up fashion (as opposed to top-down, backpropagation-based training), combining supervised learning for the higher layers with unsupervised learning for the lower layers, both based on Hebbian learning.
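To make the idea of competitive Hebbian learning concrete, the sketch below shows one generic winner-take-all update in plain Python. It is a textbook-style illustration, not the algorithm developed in this project: the function names `normalize` and `competitive_hebbian_step`, the learning rate `lr`, and the choice of a single winner with unit-norm weights are all assumptions made for the example.

```python
import math


def normalize(v):
    """Scale a vector to unit Euclidean length (returned unchanged if all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def competitive_hebbian_step(weights, x, lr=0.1):
    """Apply one winner-take-all Hebbian update (illustrative assumption).

    weights: list of unit-norm weight vectors, one per neuron (updated in place).
    x: input vector, assumed to be unit-norm.
    Returns the index of the winning neuron.
    """
    # Each neuron's activation is the dot product of its weights with the input.
    activations = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in weights]

    # Competition: only the most strongly activated neuron is allowed to learn.
    winner = max(range(len(weights)), key=lambda k: activations[k])

    # Hebbian-style update: pull the winner's weights toward the input,
    # then renormalize so the weights cannot grow without bound.
    moved = [w_i + lr * (x_i - w_i) for w_i, x_i in zip(weights[winner], x)]
    weights[winner] = normalize(moved)
    return winner


if __name__ == "__main__":
    weights = [[1.0, 0.0], [0.0, 1.0]]
    x = normalize([2.0, 1.0])
    print("winner:", competitive_hebbian_step(weights, x))
    print("weights:", weights)
```

Because only the winning neuron is updated for each input, different neurons tend to specialize on different input patterns, which is one simple route toward the sparse codes mentioned above. Enforcing decorrelation and distributedness would need additional mechanisms that this sketch does not attempt.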

Students

Aseem Wadhwa

Faculty

Upamanyu Madhow